Run RayCluster

Note

This example demonstrates how to set up KubeRay and run a RayCluster with the YuniKorn scheduler. Prerequisites:

- This tutorial assumes YuniKorn is installed under the namespace yunikorn.
- Use KubeRay version 1.2.2 or later, which adds support for YuniKorn gang scheduling.
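If you are unsure whether an existing KubeRay installation meets the 1.2.2 minimum, you can script the comparison. The helper below is hypothetical (version_at_least is not part of KubeRay or Helm). It assumes a sort that supports -V (natural version ordering), as GNU coreutils and recent macOS versions do.

```shell
# Hypothetical helper: exit 0 if version $1 is greater than or equal to $2.
# Relies on `sort -V`, which orders strings like 1.2.2 < 1.10.0 correctly.
version_at_least() {
  local actual="$1"
  local required="$2"
  # After version-sorting both values, the smaller one comes first.
  # If the required version sorts first (or both are equal), actual >= required.
  local lowest
  lowest="$(printf '%s\n%s\n' "$actual" "$required" | sort -V | head -n 1)"
  [ "$lowest" = "$required" ]
}
```

For example, `version_at_least 1.2.2 1.2.2 && echo "KubeRay version OK"`. You can read the installed chart version from the CHART column of `helm list`.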

Install YuniKorn

The following commands install YuniKorn under the namespace yunikorn. Refer to Get Started for more details.

```shell
helm repo add yunikorn https://apache.github.io/yunikorn-release
helm repo update
helm install yunikorn yunikorn/yunikorn --create-namespace --namespace yunikorn
```
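Before you install KubeRay, you can wait until the YuniKorn pods are ready. The sketch below wraps `kubectl wait`. The function name is hypothetical, and the 180-second timeout is an arbitrary choice.

```shell
# Hypothetical helper: block until every pod in the YuniKorn namespace is Ready.
# $1: namespace (defaults to "yunikorn", as used by the helm install above).
wait_for_yunikorn() {
  local namespace="${1:-yunikorn}"
  kubectl wait --for=condition=Ready pod --all \
    --namespace "$namespace" --timeout=180s
}
```

Run `wait_for_yunikorn` right after `helm install`. It returns a non-zero status if any pod is still not Ready when the timeout expires.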

Set up the KubeRay operator

```shell
helm repo add kuberay https://ray-project.github.io/kuberay-helm/
helm repo update
helm install kuberay-operator kuberay/kuberay-operator --version 1.2.2 --set batchScheduler.name=yunikorn
```

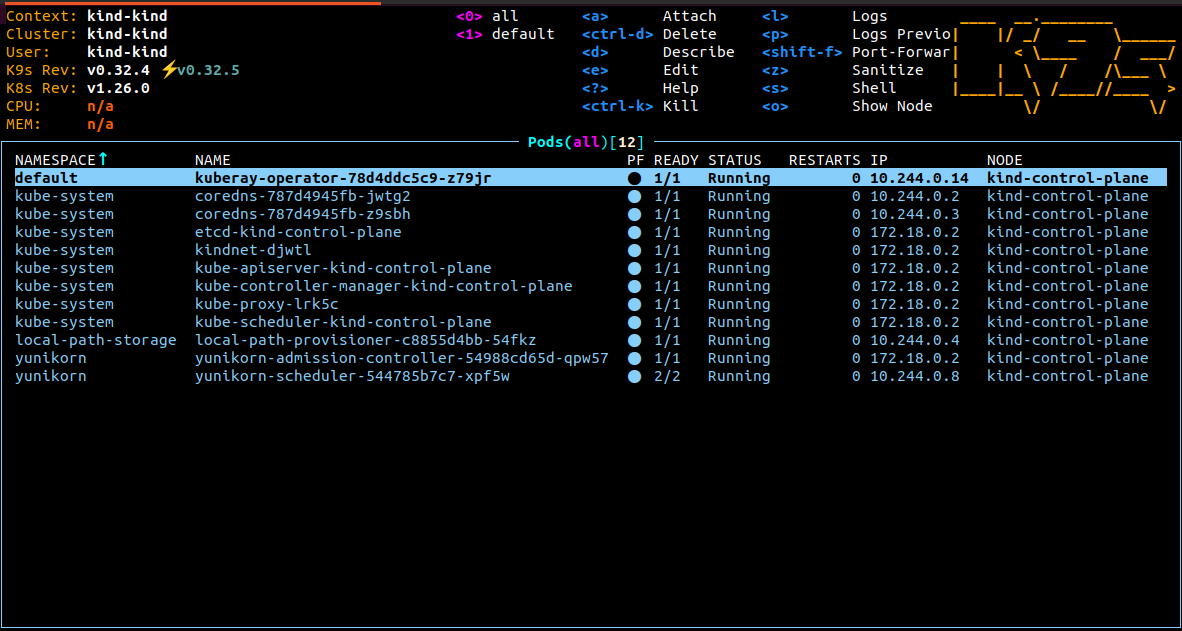

- The result should be as shown below
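The operator only integrates with YuniKorn if it was installed with batchScheduler.name=yunikorn. `helm get values` prints the values a release was installed with, so a coarse text check can confirm the setting. The helper name is hypothetical, and the grep matches any `name: yunikorn` line, not only the batchScheduler key.

```shell
# Hypothetical helper: succeed only if the KubeRay operator release was
# installed with batchScheduler.name=yunikorn (coarse text match).
# $1: Helm release name (defaults to "kuberay-operator", as installed above).
kuberay_uses_yunikorn() {
  local release="${1:-kuberay-operator}"
  # `helm get values` prints the user-supplied values of the release.
  helm get values "$release" -o yaml | grep -q 'name: yunikorn'
}
```

For example: `kuberay_uses_yunikorn && echo "KubeRay is configured for YuniKorn"`.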

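Create a RayCluster with YuniKorn

In this example, the ray.io/gang-scheduling-enabled label is set to "true" to enable gang scheduling. YuniKorn then schedules the head pod and the minimum number of worker pods as one group, so the RayCluster starts only when all of them can be placed. The yunikorn.apache.org/app-id label sets the YuniKorn application ID, and yunikorn.apache.org/queue selects the queue (root.default) that the application is submitted to.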
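To see what gang scheduling means for capacity, consider the resource requests in the manifests below. The head pod requests 1 CPU and 2Gi, and each of the two workers (minReplicas: 2) requests 1 CPU and 1Gi. Assuming the gang covers the head plus the minimum number of workers, the queue and nodes must fit the following at the same time before any of these pods is scheduled:

$$
\text{CPU} = 1 + 2 \times 1 = 3, \qquad \text{memory} = 2\,\text{Gi} + 2 \times 1\,\text{Gi} = 4\,\text{Gi}
$$

If that much capacity is not available, the pods wait together. You will not end up with a head pod running without its workers.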

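Two variants of the manifest follow. They differ only in the Ray image:

- x86-64 (Intel/Linux): rayproject/ray:2.9.0
- Apple Silicon (arm64): rayproject/ray:2.9.0-aarch64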

```shell
# x86-64 (Intel/Linux) nodes
cat <<EOF | kubectl apply -f -
apiVersion: ray.io/v1
kind: RayCluster
metadata:
  name: test-yunikorn-0
  labels:
    ray.io/gang-scheduling-enabled: "true"
    yunikorn.apache.org/app-id: test-yunikorn-0
    yunikorn.apache.org/queue: root.default
spec:
  rayVersion: "2.9.0"
  headGroupSpec:
    rayStartParams: {}
    template:
      spec:
        containers:
          - name: ray-head
            image: rayproject/ray:2.9.0
            resources:
              limits:
                cpu: "1"
                memory: "2Gi"
              requests:
                cpu: "1"
                memory: "2Gi"
  workerGroupSpecs:
    - groupName: worker
      rayStartParams: {}
      replicas: 2
      minReplicas: 2
      maxReplicas: 2
      template:
        spec:
          containers:
            - name: ray-worker
              image: rayproject/ray:2.9.0
              resources:
                limits:
                  cpu: "1"
                  memory: "1Gi"
                requests:
                  cpu: "1"
                  memory: "1Gi"
EOF
```

```shell
# Apple Silicon (arm64) nodes
cat <<EOF | kubectl apply -f -
apiVersion: ray.io/v1
kind: RayCluster
metadata:
  name: test-yunikorn-0
  labels:
    ray.io/gang-scheduling-enabled: "true"
    yunikorn.apache.org/app-id: test-yunikorn-0
    yunikorn.apache.org/queue: root.default
spec:
  rayVersion: "2.9.0"
  headGroupSpec:
    rayStartParams: {}
    template:
      spec:
        containers:
          - name: ray-head
            image: rayproject/ray:2.9.0-aarch64
            resources:
              limits:
                cpu: "1"
                memory: "2Gi"
              requests:
                cpu: "1"
                memory: "2Gi"
  workerGroupSpecs:
    - groupName: worker
      rayStartParams: {}
      replicas: 2
      minReplicas: 2
      maxReplicas: 2
      template:
        spec:
          containers:
            - name: ray-worker
              image: rayproject/ray:2.9.0-aarch64
              resources:
                limits:
                  cpu: "1"
                  memory: "1Gi"
                requests:
                  cpu: "1"
                  memory: "1Gi"
EOF
```
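Because the two manifests differ only in the image, you could keep a single copy and pick the tag with a small helper. This is a hypothetical sketch. By default it uses `uname -m` of the machine running the script, which matches the cluster nodes only in local setups such as kind or minikube on the same machine. In other setups, pass the node architecture explicitly.

```shell
# Hypothetical helper: print the Ray image for a given CPU architecture.
# $1: Ray version (default 2.9.0, matching rayVersion in the manifests)
# $2: architecture (defaults to the local machine's `uname -m`)
ray_image_for_arch() {
  local version="${1:-2.9.0}"
  local arch="${2:-$(uname -m)}"
  case "$arch" in
    x86_64|amd64) echo "rayproject/ray:${version}" ;;
    arm64|aarch64) echo "rayproject/ray:${version}-aarch64" ;;
    *)
      echo "unsupported architecture: ${arch}" >&2
      return 1
      ;;
  esac
}
```

The heredoc delimiter in the manifests is unquoted (EOF), so shell variables inside it are expanded. You could set `RAY_IMAGE="$(ray_image_for_arch)"` and write `image: ${RAY_IMAGE}` in both containers.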

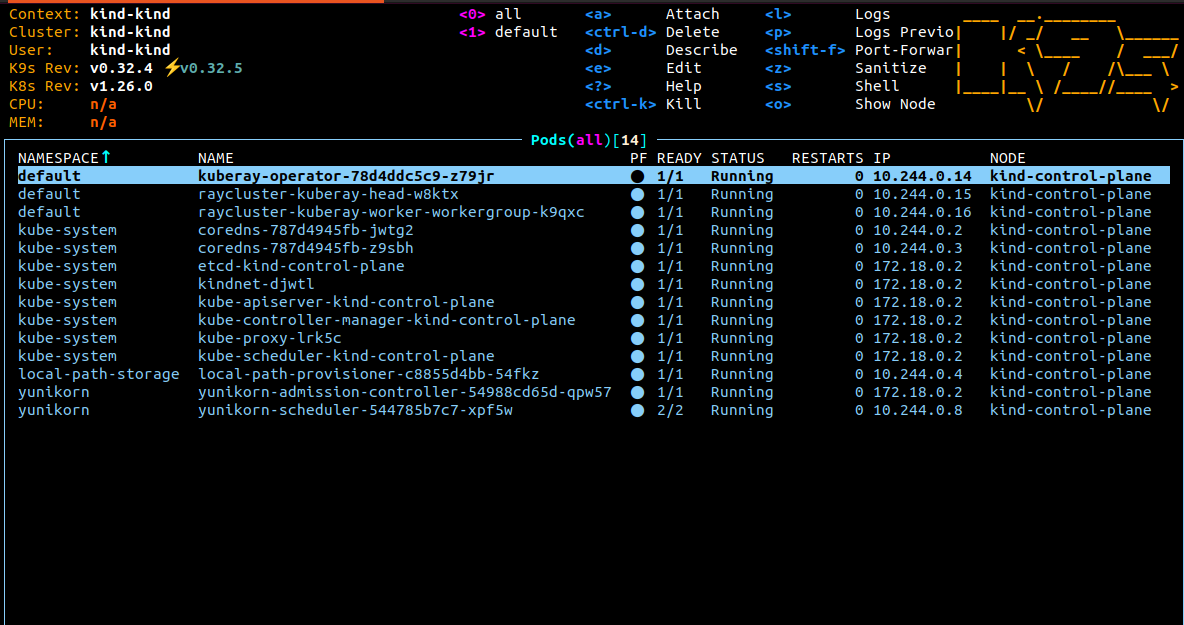

- RayCluster result
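To check the same result from the command line, list the RayCluster and the pods KubeRay created for it. KubeRay labels these pods with ray.io/cluster=<cluster name>. The wrapper function below is hypothetical.

```shell
# Hypothetical helper: show a RayCluster and the pods KubeRay created for it.
# $1: RayCluster name (defaults to the test-yunikorn-0 cluster created above).
show_raycluster() {
  local cluster="${1:-test-yunikorn-0}"
  kubectl get raycluster "$cluster"
  kubectl get pods --selector="ray.io/cluster=${cluster}" -o wide
}
```

With gang scheduling enabled, you should typically see the head pod and both worker pods leave Pending together. If resources are short, all three stay Pending.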

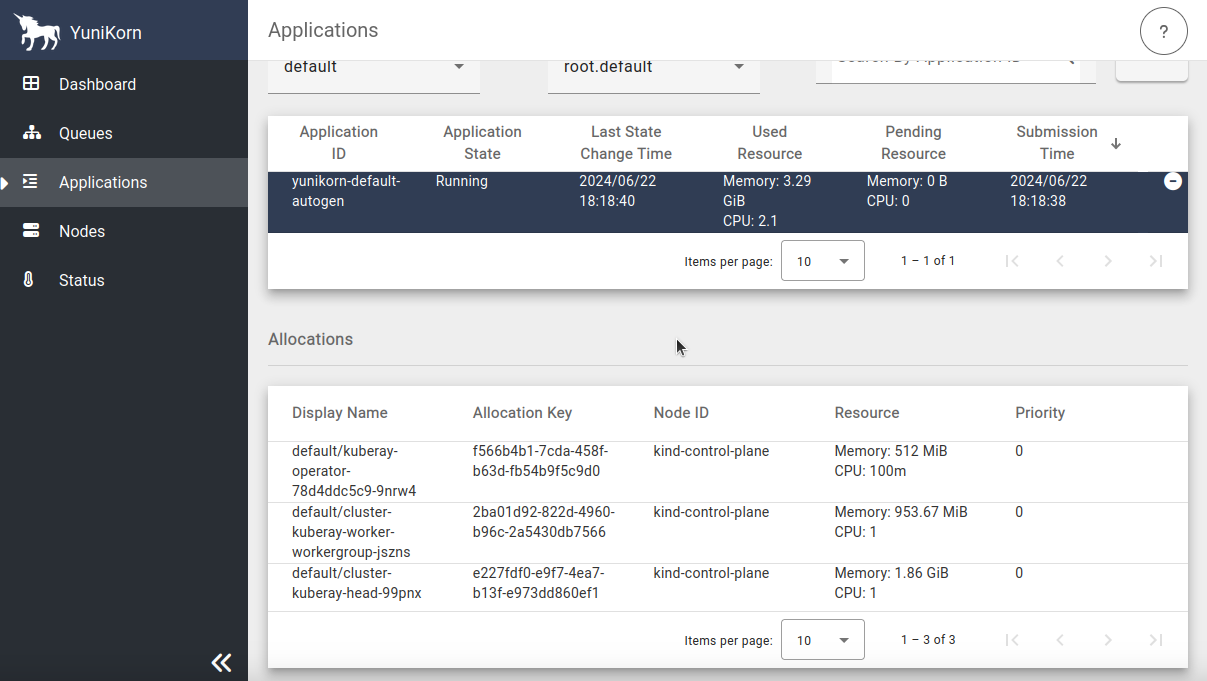

- YuniKorn UI
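To reach the YuniKorn UI, you can port-forward the YuniKorn service, as described in Get Started. The sketch below assumes the service name yunikorn-service created by the YuniKorn Helm chart and the yunikorn namespace. The function name is hypothetical.

```shell
# Forward the YuniKorn web UI to http://localhost:9889.
# Assumes the "yunikorn-service" service created by the YuniKorn Helm chart.
# Runs in the foreground; stop it with Ctrl+C.
open_yunikorn_ui() {
  local namespace="${1:-yunikorn}"
  kubectl port-forward svc/yunikorn-service 9889:9889 --namespace "$namespace"
}
```

In the UI, the application should appear under the ID test-yunikorn-0 in the root.default queue, as set by the labels in the manifest.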

Submit a Ray job to the RayCluster

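```shell
# Look up the name of the Ray head pod.
export HEAD_POD=$(kubectl get pods --selector=ray.io/node-type=head -o custom-columns=POD:metadata.name --no-headers)
echo "$HEAD_POD"

# Run a short Ray program inside the head pod and print the cluster's resources.
kubectl exec -it "$HEAD_POD" -- python -c "import ray; ray.init(); print(ray.cluster_resources())"
```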
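The ray.io/node-type=head selector matches the head pods of every RayCluster in the namespace. If you run more than one cluster, HEAD_POD can end up holding several names. A narrower lookup also filters on the ray.io/cluster label. The helper below is a hypothetical sketch.

```shell
# Hypothetical helper: print the head pod name of one specific RayCluster.
# Filtering on both ray.io/cluster and ray.io/node-type avoids picking up
# head pods that belong to other RayClusters in the same namespace.
ray_head_pod() {
  local cluster="${1:-test-yunikorn-0}"
  kubectl get pods \
    --selector="ray.io/cluster=${cluster},ray.io/node-type=head" \
    -o jsonpath='{.items[0].metadata.name}'
}
```

Usage: `HEAD_POD="$(ray_head_pod test-yunikorn-0)"`.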

Kubernetes Services are not directly accessible from outside the cluster by default. However, you can use port forwarding to connect to the head service from your local machine:

```shell
# Runs in the foreground; keep this terminal open while you use the dashboard.
kubectl port-forward service/test-yunikorn-0-head-svc 8265:8265
```

Once port forwarding is running, open http://localhost:8265 in your web browser to access the Ray dashboard.

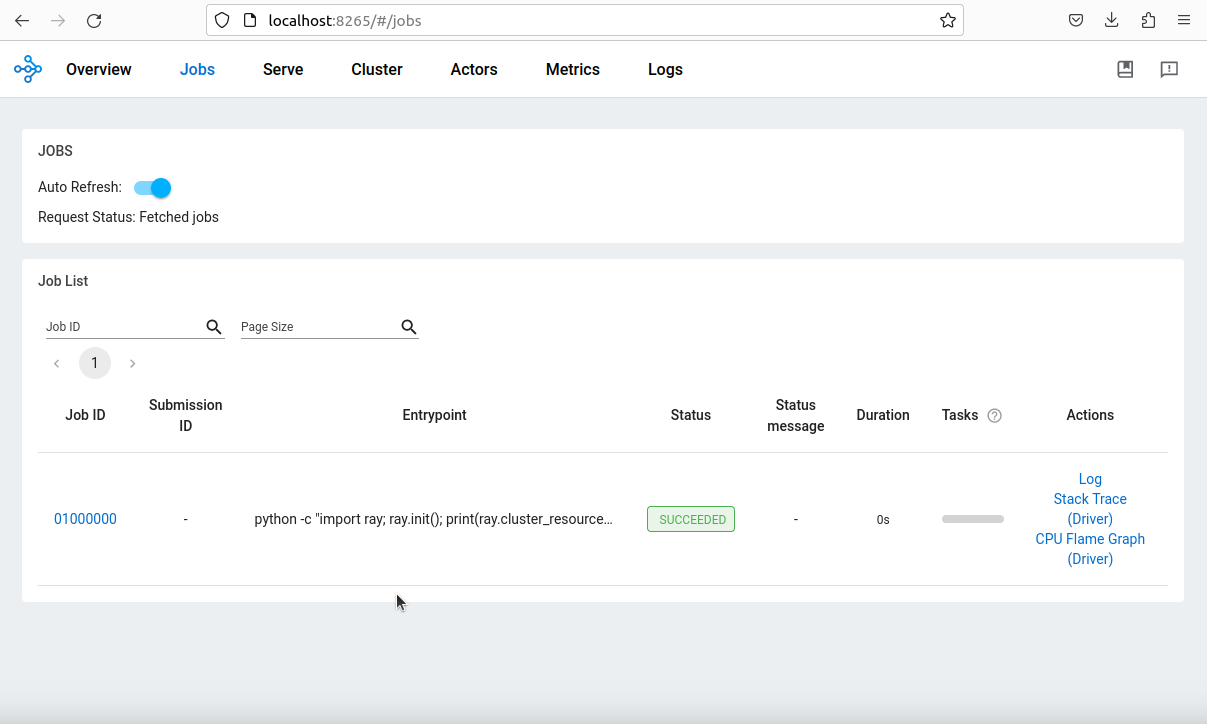

- Ray Dashboard
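While the port-forward to test-yunikorn-0-head-svc is running, you can also submit work through the Ray Jobs API instead of kubectl exec. This sketch assumes the Ray CLI is installed locally (for example with pip install "ray[default]"), ideally at the cluster's Ray version (2.9.0). The function name is hypothetical.

```shell
# Hypothetical helper: submit a small job through the Ray Jobs API exposed by
# the port-forwarded dashboard (http://localhost:8265 by default).
submit_probe_job() {
  local address="${1:-http://localhost:8265}"
  ray job submit --address "$address" -- \
    python -c "import ray; ray.init(); print(ray.cluster_resources())"
}
```

The submitted job also appears in the Jobs view of the Ray dashboard.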

For more details, see the official KubeRay documentation on the integration with Apache YuniKorn.