Gang Scheduling

What is Gang Scheduling

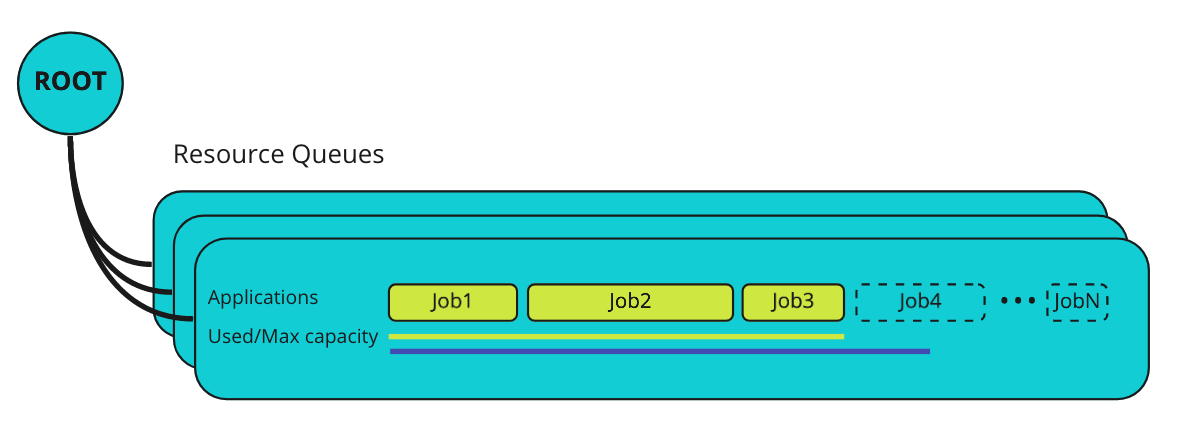

When Gang Scheduling is enabled, YuniKorn schedules an app only when the app’s minimal resource request can be satisfied. Otherwise, the app waits in the queue. Apps are queued in hierarchical queues. With gang scheduling enabled, each resource queue is assigned the maximum number of applications that can run concurrently with their minimal resources guaranteed.

Enable Gang Scheduling

There is no cluster-wide configuration needed to enable Gang Scheduling. The scheduler actively monitors the metadata of each app. If the app includes a valid taskGroups definition, it is treated as requesting gang scheduling.

A task group is a “gang” of tasks in an app. These tasks have the same resource profile and the same placement constraints, so the scheduler can treat them as homogeneous requests of the same kind.

Prerequisite

For queues that run gang scheduling enabled applications, the queue sorting policy should be set to FIFO.

To configure the queue sorting policy, refer to the app sorting policies documentation.
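
As a minimal sketch, a queue configuration that sets FIFO sorting might look like the following. The queue name `sandbox` matches the `root.sandbox` queue used in the example later on this page. The `application.sort.policy` property is how we understand the queue configuration to express the sorting policy, so verify it against the sorting policies documentation for your YuniKorn version.

```yaml
# Sketch of a queue configuration; check property names against your YuniKorn version.
partitions:
  - name: default
    queues:
      - name: root
        queues:
          - name: sandbox
            properties:
              # FIFO sorting for the queue that runs gang scheduled apps
              application.sort.policy: fifo
```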

Why the FIFO sorting policy

When Gang Scheduling is enabled, the scheduler proactively reserves resources for each application. If the queue sorting policy is not FIFO based (StateAware is also a FIFO based sorting policy), the scheduler might reserve partial resources for each app, causing resource segmentation issues.

Side effects of StateAware sorting policy

We do not recommend using StateAware, even though it is a FIFO based policy. A failure of the first pod, or a long initialisation period of that pod, could slow down the processing.

This is specifically an issue with Spark jobs when the driver performs a lot of pre-processing before requesting the executors.

The StateAware timeout in those cases would slow down processing to just one application per timeout.

This in effect will overrule the gang reservation and cause slowdowns and excessive resource usage.

StateAware sorting is deprecated in YuniKorn 1.5.0 and will be removed from YuniKorn 1.6.0.

App Configuration

On Kubernetes, YuniKorn discovers apps by loading metadata from individual pods. The first pod of the app is required to include a full copy of the app metadata. If the app has no notion of a first or second pod, then all pods are required to carry the same taskGroups info. Gang scheduling requires a taskGroups definition, which can be specified via pod annotations. The annotations are:

| Annotation | Value |
|---|---|
| yunikorn.apache.org/task-group-name | Task group name. It must be unique within the application. |
| yunikorn.apache.org/task-groups | A list of task groups. Each item contains all the info defined for that task group. |
| yunikorn.apache.org/schedulingPolicyParameters | Optional. Arbitrary key-value pairs that define scheduling policy parameters. See the Scheduling Policy Parameters section. |

How many task groups are needed?

This depends on how many different types of pods the app requests from K8s. A task group is a “gang” of tasks in an app. These tasks have the same resource profile and the same placement constraints, so the scheduler can treat them as homogeneous requests of the same kind. Using Spark as an example, each job needs 2 task groups: one for the driver pod and one for the executor pods.

How to define task groups?

The task group definition is a copy of the app’s real pod definition. Values for fields such as resources, node selector, tolerations, affinity and topology spread constraints should be the same as in the real pods. This ensures that the scheduler reserves resources using the exact pod specification.
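
To illustrate the mirroring, here is a sketch of a pod template fragment whose task group repeats the pod's resources, node selector and toleration. The group name `workers`, the node label `disktype: ssd` and the `dedicated=batch` taint are hypothetical and only serve the example.

```yaml
# Illustrative fragment; the group name, node label and taint are hypothetical.
metadata:
  annotations:
    yunikorn.apache.org/task-group-name: workers
    yunikorn.apache.org/task-groups: |-
      [{
          "name": "workers",
          "minMember": 3,
          "minResource": {
            "cpu": "500m",
            "memory": "1Gi"
          },
          "nodeSelector": {
            "disktype": "ssd"
          },
          "tolerations": [{
            "key": "dedicated",
            "operator": "Equal",
            "value": "batch",
            "effect": "NoSchedule"
          }]
      }]
spec:
  schedulerName: yunikorn
  # Same node selector and toleration as in the task group
  nodeSelector:
    disktype: ssd
  tolerations:
    - key: dedicated
      operator: Equal
      value: batch
      effect: NoSchedule
  containers:
    - name: worker
      image: "alpine:latest"
      command: ["sleep", "30"]
      resources:
        requests:
          # Same values as minResource in the task group
          cpu: "500m"
          memory: "1Gi"
```

If the two definitions drift apart, the placeholders may be reserved on nodes where the real pods cannot run.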

Scheduling Policy Parameters

Scheduling policy parameters are configurable per app. Apply the parameters in the following format in the pod's annotations:

    annotations:
      yunikorn.apache.org/schedulingPolicyParameters: "PARAM1=VALUE1 PARAM2=VALUE2 ..."

Currently, the following parameters are supported:

placeholderTimeoutInSeconds

Default value: 15 minutes (900 seconds). This parameter defines the reservation timeout: how long the scheduler waits before it gives up allocating all the placeholders. The timer starts when the scheduler allocates the first placeholder pod. This ensures that if the scheduler cannot schedule all the placeholder pods, it eventually gives up so that the resources can be freed and used by other apps. If none of the placeholders can be allocated, this timeout does not take effect. To prevent placeholder pods from being stuck forever, refer to the troubleshooting documentation for solutions.

gangSchedulingStyle

Valid values: Soft, Hard

Default value: Soft. This parameter defines the fallback mechanism used if the app encounters gang issues due to placeholder pod allocation. See the Gang scheduling Styles section for more details.

More scheduling parameters will be added in order to provide more flexibility while scheduling apps.

Example

The following example is a YAML file for a job. This job launches 2 pods and each pod sleeps for 30 seconds.

The notable change in the pod spec is spec.template.metadata.annotations, where we define yunikorn.apache.org/task-group-name and yunikorn.apache.org/task-groups.

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: gang-scheduling-job-example
    spec:
      completions: 2
      parallelism: 2
      template:
        metadata:
          labels:
            app: sleep
            applicationId: "gang-scheduling-job-example"
            queue: root.sandbox
          annotations:
            yunikorn.apache.org/task-group-name: task-group-example
            yunikorn.apache.org/task-groups: |-
              [{
                  "name": "task-group-example",
                  "minMember": 2,
                  "minResource": {
                    "cpu": "100m",
                    "memory": "50M"
                  },
                  "nodeSelector": {},
                  "tolerations": [],
                  "affinity": {},
                  "topologySpreadConstraints": []
              }]
        spec:
          schedulerName: yunikorn
          restartPolicy: Never
          containers:
            - name: sleep30
              image: "alpine:latest"
              command: ["sleep", "30"]
              resources:
                requests:
                  cpu: "100m"
                  memory: "50M"

When this job is submitted to Kubernetes, 2 pods are created from the same template, and both belong to one taskGroup: “task-group-example”. YuniKorn creates 2 placeholder pods, each using the resources specified in the taskGroup definition. Once both placeholders are allocated, the scheduler binds the 2 real sleep pods to the spots reserved by the placeholders.

You can define more than one taskGroup if necessary. Each taskGroup is identified by its name, and each real pod must be mapped to a pre-defined taskGroup by setting the taskGroup name on the pod. Note that the task group name only needs to be unique within an application.

Enable Gang scheduling for Spark jobs

Each Spark job runs 2 types of pods, driver and executor. Hence, we need to define 2 task groups for each job. The annotations for the driver pod look like:

    annotations:
      yunikorn.apache.org/schedulingPolicyParameters: "placeholderTimeoutInSeconds=30 gangSchedulingStyle=Hard"
      yunikorn.apache.org/task-group-name: "spark-driver"
      yunikorn.apache.org/task-groups: |-
        [{
            "name": "spark-driver",
            "minMember": 1,
            "minResource": {
              "cpu": "1",
              "memory": "2Gi"
            },
            "nodeSelector": {},
            "tolerations": [],
            "affinity": {},
            "topologySpreadConstraints": []
        },
        {
            "name": "spark-executor",
            "minMember": 1,
            "minResource": {
              "cpu": "1",
              "memory": "2Gi"
            }
        }]

The TaskGroup resources must account for the memory overhead for Spark drivers and executors. See the Spark documentation for details on how to calculate the values.
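
As a worked sketch of that calculation, assume `spark.driver.memory=2g` and `spark.executor.memory=2g`, and assume Spark's default overhead of 10% of the memory with a minimum of 384MiB. Both assumptions are ours and not part of the example above; the defaults differ between Spark versions and for non-JVM jobs, so check the Spark documentation for your setup.

```yaml
# Assumed: 2g of driver/executor memory and the default overhead rule
# max(10% of memory, 384MiB).
# 10% of 2048MiB is about 205MiB, so the 384MiB minimum applies:
# 2048MiB + 384MiB = 2432MiB per pod.
annotations:
  yunikorn.apache.org/task-group-name: "spark-driver"
  yunikorn.apache.org/task-groups: |-
    [{
        "name": "spark-driver",
        "minMember": 1,
        "minResource": {
          "cpu": "1",
          "memory": "2432Mi"
        }
    },
    {
        "name": "spark-executor",
        "minMember": 1,
        "minResource": {
          "cpu": "1",
          "memory": "2432Mi"
        }
    }]
```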

For all the executor pods:

    annotations:
      # the taskGroup name must match one of the names
      # defined in the taskGroups field
      yunikorn.apache.org/task-group-name: "spark-executor"

Once the job is submitted to the scheduler, the job won’t be scheduled immediately. Instead, the scheduler will ensure it gets its minimal resources before actually starting the driver/executors.

Gang scheduling Styles

Two gang scheduling styles are supported: Soft and Hard. The style is configured per app and defines how the app behaves when gang scheduling fails.

- Hard style: this is the original behavior. If the application cannot be scheduled according to gang scheduling rules and it times out, it is marked as failed and scheduling is not retried.

- Soft style: when the app cannot be gang scheduled, it falls back to normal scheduling, and the non-gang scheduling strategy is used to achieve best-effort scheduling. When this happens, the app transitions to the Resuming state and all the remaining placeholder pods are cleaned up.

Default style used: Soft

Enable a specific style: set the ‘gangSchedulingStyle’ parameter to Soft or Hard in the schedulingPolicyParameters annotation of the application definition.

Example

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: gang-app-timeout
    spec:
      completions: 4
      parallelism: 4
      template:
        metadata:
          labels:
            app: sleep
            applicationId: gang-app-timeout
            queue: fifo
          annotations:
            yunikorn.apache.org/task-group-name: sched-style
            yunikorn.apache.org/schedulingPolicyParameters: "placeholderTimeoutInSeconds=60 gangSchedulingStyle=Hard"
            yunikorn.apache.org/task-groups: |-
              [{
                  "name": "sched-style",
                  "minMember": 4,
                  "minResource": {
                    "cpu": "1",
                    "memory": "1000M"
                  },
                  "nodeSelector": {},
                  "tolerations": [],
                  "affinity": {},
                  "topologySpreadConstraints": []
              }]
        spec:
          schedulerName: yunikorn
          restartPolicy: Never
          containers:
            - name: sleep30
              image: "alpine:latest"
              imagePullPolicy: "IfNotPresent"
              command: ["sleep", "30"]
              resources:
                requests:
                  cpu: "1"
                  memory: "1000M"
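
For comparison, a sketch of the same annotations with the Soft style. Only the `gangSchedulingStyle` value differs, and because Soft is the default, the parameter could also be left out.

```yaml
annotations:
  yunikorn.apache.org/task-group-name: sched-style
  # With Soft, the app falls back to normal scheduling instead of failing
  # when it cannot be gang scheduled.
  yunikorn.apache.org/schedulingPolicyParameters: "placeholderTimeoutInSeconds=60 gangSchedulingStyle=Soft"
  # The yunikorn.apache.org/task-groups annotation stays the same as in the Hard example.
```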

Verify Configuration

To verify if the configuration has been done completely and correctly, check the following things:

- When an app is submitted, verify that the scheduler creates the expected number of placeholders. If you define 2 task groups, one with minMember 1 and the other with minMember 5, then 6 placeholders should be created once the job is submitted.

- Verify that the placeholder spec is correct. Each placeholder needs to have the same info as the real pods in the same taskGroup. Check fields including: namespace, pod resources, node selector, tolerations, affinity and topology spread constraints.

- Verify that the placeholders can be allocated on the correct type of nodes, and that the real pods are started by replacing the placeholder pods.
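
The placeholder count from the first check can be worked through with a sketch like the following. The group names `group-a` and `group-b` and the resource values are hypothetical.

```yaml
annotations:
  yunikorn.apache.org/task-groups: |-
    [{
        "name": "group-a",
        "minMember": 1,
        "minResource": {"cpu": "1", "memory": "1Gi"}
    },
    {
        "name": "group-b",
        "minMember": 5,
        "minResource": {"cpu": "1", "memory": "1Gi"}
    }]
# Expected placeholders: 1 (group-a) + 5 (group-b) = 6
```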

Troubleshooting

For issues that occur when gang scheduling is enabled, see the troubleshooting documentation.